Associate AI Engineer

Associate AI Engineers are students or working professionals who have the necessary skills and knowledge to start working on an AI project. They are not required to demonstrate how their technical skills relate to business needs. Rather, they are deemed proficient in the technical skills required to start working for a business organization that has some data capabilities.

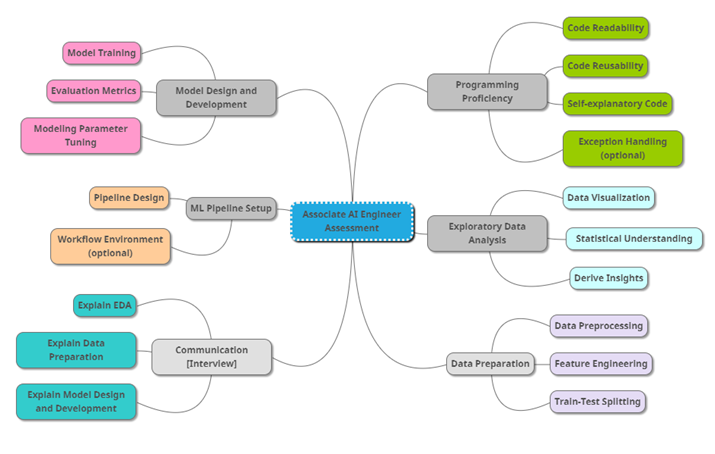

Assessment Rubrics for Associate AI Engineer

1. Programming Proficiency Assessment

Code Readability

- Code is organized in a clean and readable format

- Code layout style, such as indentation and maximum line length, is consistent with industry conventions such as PEP 8 (https://www.python.org/dev/peps/pep-0008)

Code Reusability

- Code is structured to minimize repetition (e.g. by using functions)

Self Explanatory Code

- Use proper naming conventions for variables, functions, and classes

- Document the source code with inline comments

- State the intent of functions and classes clearly

Exception Handling

- Include code to catch and handle exceptions such as insufficient data, an empty dataset, or a wrong data type
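
The programming criteria above can be illustrated with a short sketch. The function name `mean_of_column` and the sample records are hypothetical, chosen only for illustration; this is one possible way to satisfy the rubric, not a required pattern.

```python
from typing import Dict, List


def mean_of_column(records: List[Dict[str, object]], column: str) -> float:
    """Return the arithmetic mean of a numeric column.

    Raises:
        ValueError: if the dataset is empty.
        KeyError: if a record is missing the column.
        TypeError: if a value in the column is not numeric.
    """
    if not records:
        raise ValueError("dataset is empty")

    total = 0.0
    for row_number, record in enumerate(records):
        if column not in record:
            raise KeyError(f"row {row_number} has no column '{column}'")
        value = record[column]
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise TypeError(
                f"row {row_number}: expected a number, "
                f"got {type(value).__name__}"
            )
        total += value
    return total / len(records)


# Hypothetical sample data for illustration only.
sample = [{"age": 30}, {"age": 40}, {"age": 50}]
average_age = mean_of_column(sample, "age")

# The caller decides how to recover from an empty dataset.
try:
    mean_of_column([], "age")
except ValueError as error:
    empty_dataset_message = str(error)
```

The docstring states the intent, the names describe their contents, and each failure mode named in the rubric raises a specific exception that the caller can handle.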

2. Exploratory Data Analysis Assessment

Data Visualization

- Use appropriate visualization tools to generate plots to show the relationship between variables

Statistical Understanding

- Generate and provide a good explanation of descriptive statistics (mean, median, mode, standard deviation, and variance)

- Include analysis of the relationships between variables
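
As a minimal sketch of these statistics, the snippet below uses only Python's standard library. The two variables (`hours_studied`, `exam_score`) and their values are hypothetical, and Pearson correlation is just one of several ways to analyze the relationship between two variables.

```python
import math
import statistics

# Hypothetical measurements, used only to illustrate the calculations.
hours_studied = [2, 4, 4, 6, 9]
exam_score = [50, 60, 65, 70, 90]


def describe(values):
    """Return the descriptive statistics named in the rubric."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "std_dev": statistics.stdev(values),       # sample standard deviation
        "variance": statistics.variance(values),   # sample variance
    }


def pearson_correlation(x, y):
    """Return the Pearson correlation coefficient between two variables."""
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariance / (spread_x * spread_y)


summary = describe(hours_studied)
correlation = pearson_correlation(hours_studied, exam_score)
```

A good explanation goes beyond the numbers. Here, for instance, the mean (5) sits above the median (4) because the single large value 9 pulls it upward.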

Derive Insights

- Extract and explain insights from the EDA

- Link insights into a coherent story

- Engineer features based on the insights drawn

3. Data Preparation Assessment

Data Preprocessing

- Perform missing data analysis and handle missing data appropriately, either by removing records with missing values or by performing data imputation

- Perform outlier analysis and handle outliers appropriately, either by removing outlier records or by replacing outlier values

- Investigate the data for erroneous values and apply appropriate preprocessing to correct them
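
The preprocessing steps above can be sketched as follows, using only the standard library. The column `raw_incomes` is hypothetical, and median imputation and IQR-based capping are illustrative choices; the appropriate method depends on the dataset.

```python
import statistics

# Hypothetical column with missing entries (None) and one extreme value.
raw_incomes = [3200, None, 2900, 3100, 250000, None, 3000, 3300]


def impute_with_median(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute: every value is missing")
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def cap_outliers_iqr(values, k=1.5):
    """Clip values outside [Q1 - k*IQR, Q3 + k*IQR] to the nearest fence."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, lower), upper) for v in values]


missing_count = sum(v is None for v in raw_incomes)  # missing data analysis
imputed = impute_with_median(raw_incomes)
cleaned = cap_outliers_iqr(imputed)
```

The median is used for imputation here because, unlike the mean, it is not distorted by the extreme value in the column.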

Feature Engineering

- Perform basic data transformations: for numerical data, e.g. mathematical transformations or binning into categories; for string data, e.g. string replacement, substring extraction, or concatenation of multiple strings

- Perform basic feature engineering to improve model accuracy
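
Each of the basic transformations listed above is shown once in the sketch below. The record, its field names, and the bin boundaries are all hypothetical.

```python
import math

# Hypothetical raw record; field names are illustrative only.
record = {
    "customer_id": "SG-2021-0042",
    "first_name": "Mei",
    "last_name": "Tan",
    "age": 37,
    "income": 4200.0,
}


def age_group(age):
    """Bin a numeric age into a labelled category."""
    if age < 18:
        return "minor"
    if age < 65:
        return "adult"
    return "senior"


features = {
    # Mathematical transformation: log(1 + x) compresses skewed values.
    "log_income": math.log1p(record["income"]),
    # Binning a number into categories.
    "age_group": age_group(record["age"]),
    # String replacement: remove the separators from the identifier.
    "id_compact": record["customer_id"].replace("-", ""),
    # Substring extraction: the first two characters hold a region code.
    "region_code": record["customer_id"][:2],
    # Concatenating multiple strings.
    "full_name": record["first_name"] + " " + record["last_name"],
}
```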

Train-test-validate splitting

- Perform basic train-test-validate data split for model building to ensure the final model is not overfitted and model testing is unbiased
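
A minimal sketch of such a split is given below. The function name, the 60/20/20 proportions, and the seed are assumptions made for illustration. The training set fits the model, the validation set guides tuning and model selection, and the test set is held back for a final, unbiased estimate.

```python
import random


def train_validate_test_split(rows, validate_fraction=0.2,
                              test_fraction=0.2, seed=42):
    """Shuffle rows and split them into train, validation and test sets."""
    if not 0 < validate_fraction + test_fraction < 1:
        raise ValueError("fractions must leave room for a training set")
    shuffled = list(rows)                  # copy so the input is untouched
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_test = int(len(shuffled) * test_fraction)
    n_validate = int(len(shuffled) * validate_fraction)
    test = shuffled[:n_test]
    validate = shuffled[n_test:n_test + n_validate]
    train = shuffled[n_test + n_validate:]
    return train, validate, test


# Hypothetical dataset of 100 row indices.
train, validate, test = train_validate_test_split(range(100))
```

Shuffling before splitting matters when the rows are ordered, for example by date or by class. For time-series data a chronological split is usually more appropriate than a random one.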

4. Model Design and Development Assessment

Problem Scoping

- Able to define modeling objective based on problem statement

- Able to list appropriate assumptions and considerations related to the problem scoping

Model Training

- Display good conceptual understanding of ML algorithms and models

- Build an appropriate model for the task using a major ML framework

Evaluation Metrics

- Evaluates model performance using suitable metrics

- Able to explain the core concepts behind model selection, such as the bias-variance trade-off
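
To illustrate why the choice of metric matters, the sketch below computes four common classification metrics by hand. The function name and the labels are hypothetical; in practice an ML framework's built-in metrics would normally be used.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for a binary task."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical labels: 2 positives among 10 samples (imbalanced).
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]  # always predicts the majority
metrics = classification_metrics(y_true, y_pred)
```

This model reaches 80% accuracy while finding none of the positive cases (recall is 0), so accuracy alone would be an unsuitable metric for this imbalanced task.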

Modeling Parameter Tuning

- Takes into account different modeling parameters and architectures for comparison
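
Parameter comparison can be sketched with a tiny k-nearest-neighbors classifier written from scratch. The data points and candidate values of `k` are hypothetical, and a real submission would more likely use a framework's tuning utilities.

```python
from collections import Counter


def knn_predict(train_points, query, k):
    """Predict a label by majority vote among the k nearest 1-D points."""
    by_distance = sorted(train_points,
                         key=lambda point: abs(point[0] - query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


def accuracy(train_points, eval_points, k):
    """Fraction of eval_points classified correctly for a given k."""
    hits = sum(knn_predict(train_points, x, k) == label
               for x, label in eval_points)
    return hits / len(eval_points)


# Hypothetical (feature, label) pairs; the 0-labelled point at 5.0 is
# noise sitting among the 1-labelled points.
train_points = [(1.0, 0), (2.0, 0), (3.0, 0), (5.0, 0),
                (4.5, 1), (5.5, 1), (6.0, 1), (7.0, 1)]
validation_points = [(1.5, 0), (2.5, 0), (4.9, 1), (5.2, 1), (6.5, 1)]

# Compare candidate values of k on the validation set, not the test set.
scores = {k: accuracy(train_points, validation_points, k) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)
```

With `k=1` the model follows the noisy training point and misclassifies nearby validation points, which is an example of high variance. Larger values of `k` smooth over the noise, at the cost of more bias when `k` grows too large.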

5. ML Pipeline Setup Assessment

Pipeline Design

- Design a modular pipeline to ingest data, clean and transform it, train models, generate evaluation metrics, and make inferences

- Automate pipeline workflow

- Functional pipeline runs successfully end-to-end

- Include a README file to describe how to run the pipeline
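
One possible shape for a modular pipeline is sketched below, with one function per stage. The stage names, the in-memory data, and the threshold "model" are all hypothetical simplifications. For brevity the sketch evaluates on the training rows; a real pipeline would evaluate on held-out data.

```python
import statistics


def ingest():
    """Return raw (feature, label) rows; a real pipeline would read a file."""
    return [(1.0, 0), (2.0, 0), (None, 0), (8.0, 1), (9.0, 1), (10.0, 1)]


def clean(rows):
    """Drop rows with a missing feature value."""
    return [(x, y) for x, y in rows if x is not None]


def transform(rows):
    """Scale features to [0, 1]; assumes at least two distinct values."""
    xs = [x for x, _ in rows]
    low, high = min(xs), max(xs)
    return [((x - low) / (high - low), y) for x, y in rows]


def train(rows):
    """Fit a threshold halfway between the two class means."""
    mean_0 = statistics.mean(x for x, y in rows if y == 0)
    mean_1 = statistics.mean(x for x, y in rows if y == 1)
    return (mean_0 + mean_1) / 2


def predict(threshold, x):
    """Classify a scaled feature value against the learned threshold."""
    return int(x > threshold)


def evaluate(threshold, rows):
    """Return accuracy of the threshold model on the given rows."""
    return sum(predict(threshold, x) == y for x, y in rows) / len(rows)


def run_pipeline():
    """Run every stage in order and return the model and its metric."""
    rows = transform(clean(ingest()))
    threshold = train(rows)
    return threshold, evaluate(threshold, rows)


threshold, accuracy = run_pipeline()
```

Because each stage is a separate function with a single responsibility, stages can be tested and replaced independently, and a single entry point (`run_pipeline` here) makes the workflow easy to automate and to document in the README.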

Workflow Environment

- Set up the ML workflow environment and file structure to support the pipeline

- Include a library versioning requirements file, e.g. “requirements.txt” or “conda.yml”, depending on the setup option
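
Pinned versions are usually captured with a tool such as `pip freeze`. As a rough sketch of the same idea, the snippet below builds `name==version` lines with the standard library's `importlib.metadata`. The function name and the package name are hypothetical.

```python
from importlib import metadata


def pinned_requirements(package_names):
    """Return 'name==version' lines for the installed packages given."""
    lines = []
    for name in package_names:
        try:
            lines.append(f"{name}=={metadata.version(name)}")
        except metadata.PackageNotFoundError:
            # Surface missing packages instead of silently skipping them.
            lines.append(f"# {name} is not installed in this environment")
    return lines


# 'hypothetical-package-xyz' is a made-up name used to show the fallback.
requirement_lines = pinned_requirements(["hypothetical-package-xyz"])
```

Pinning exact versions lets an assessor recreate the same environment and reproduce the pipeline's results.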

6. Communication [During Interview]

Explain EDA

- Able to draw insights using EDA

- Able to collate insights into a coherent story and articulate it clearly

Explain Data Preparation

- Able to justify the methods used for imputing missing values, correcting erroneous data, or handling outliers

- Able to show how the new features help with model improvement

- Able to explain the rationale of train-test-validate data split strategy adopted

Explain Model Design

- Able to explain how the solution addresses the problem statement

- Able to clearly articulate the benefits and drawbacks of the ML solution

Explain Pipeline Workflow

- Able to explain how the workflow is designed

- Able to explain how evaluation of the developed solution is embedded in the workflow

- Able to identify and address blockers that may arise in the workflow (data curation, model training and inference, deployment)
